We create computational systems for discovery and translation in Autism Spectrum Disorder.

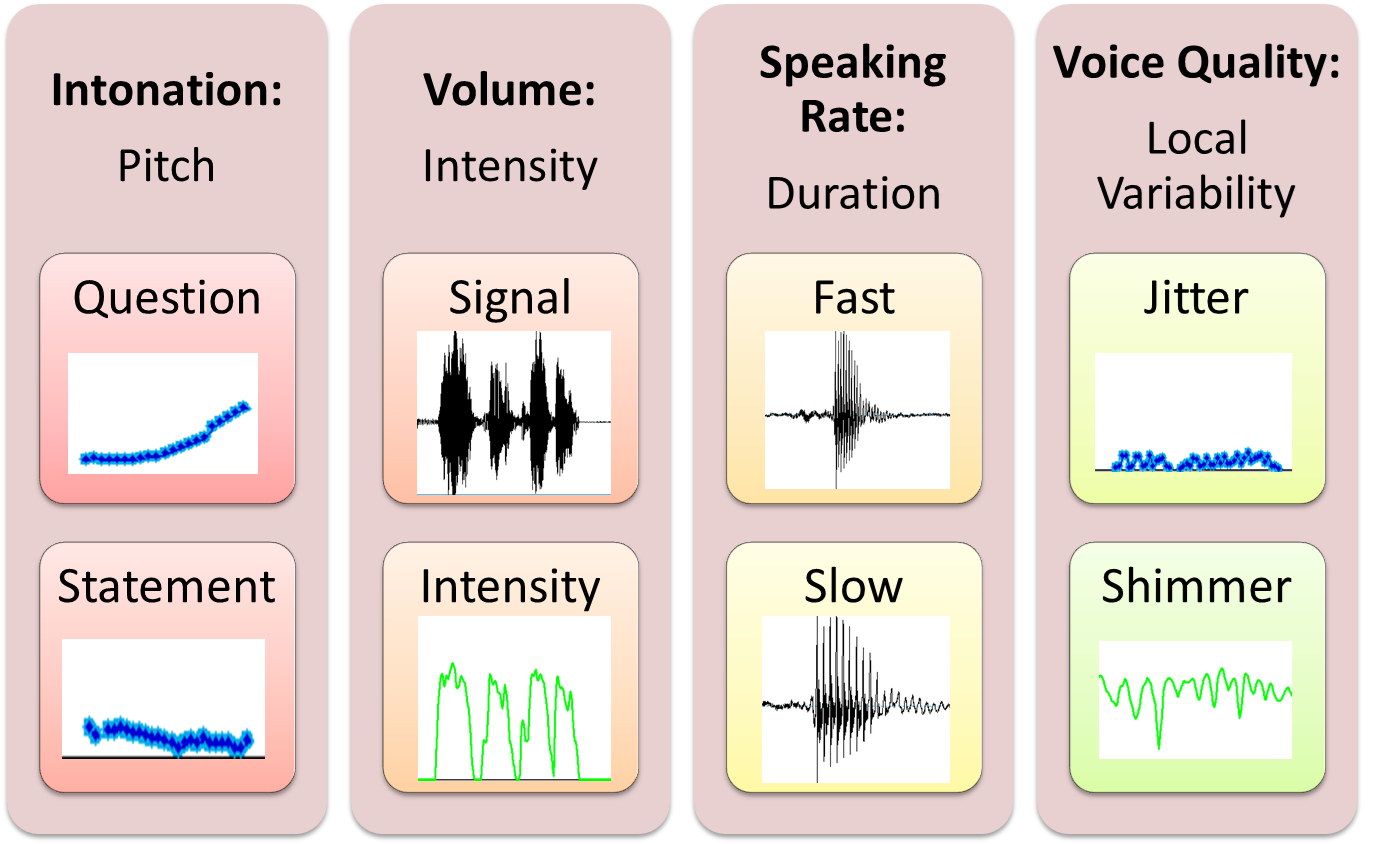

We quantify vocal cues such as prosody, which is often "atypical" in Autism Spectrum Disorder.

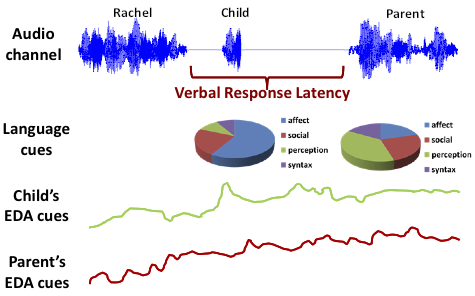

We develop knowledge-driven methods to represent electrodermal activity (EDA), a psychophysiological signal linked to stress, affect, and cognition.

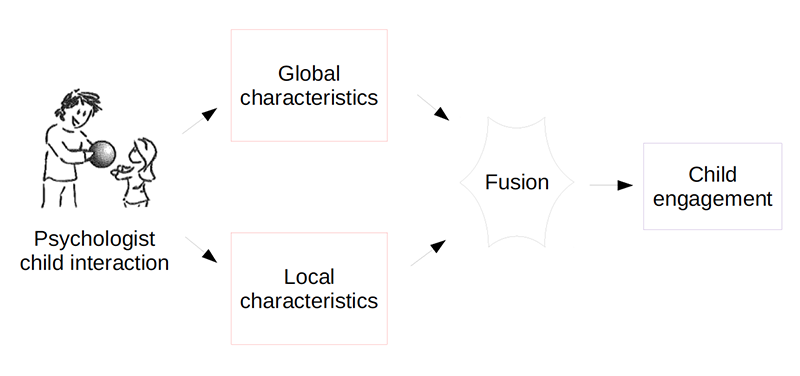

We automatically rate engagement from vocal cues.

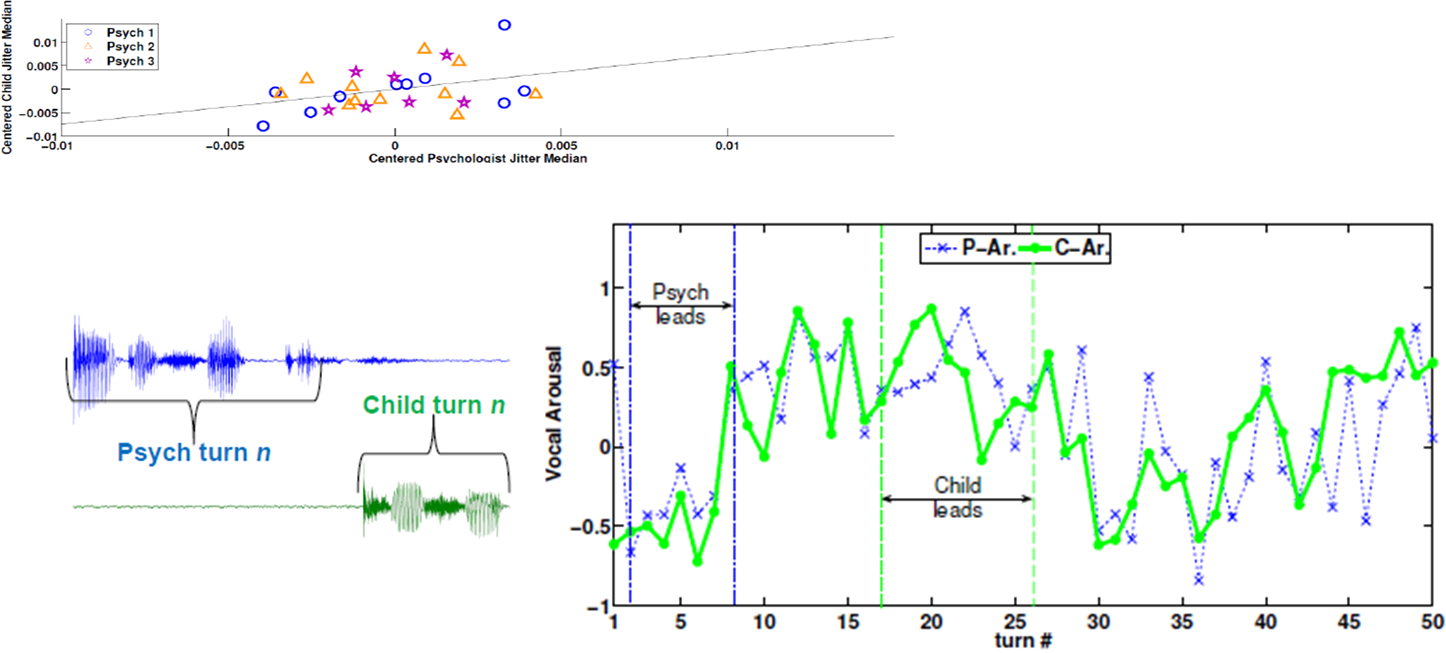

We analyze the mutual influence between participants (i.e., child and psychologist) through prosodic and affective signals.

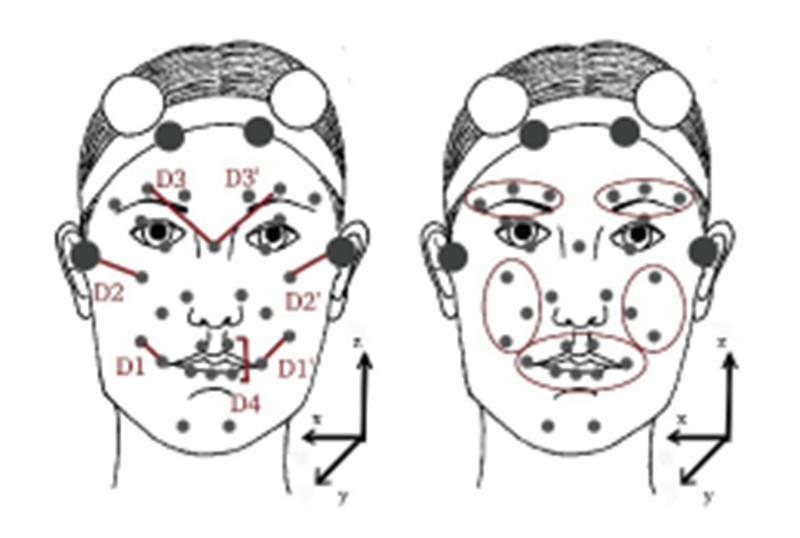

We analyze dynamics and symmetry of affective facial expressions.
