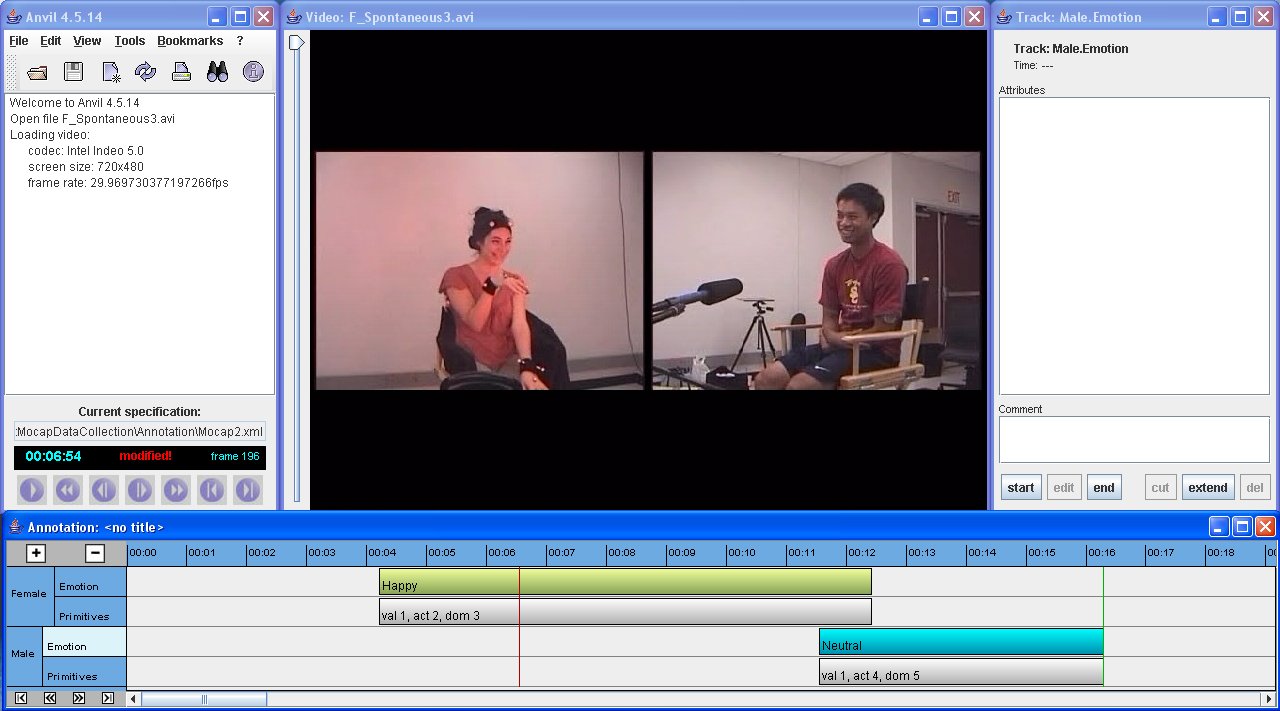

Scope of the Database

- Recognition and Analysis of Emotional Expression
- Analysis of Human Dyadic Interactions
- Design of Emotion-Sensitive Human Computer Interfaces and Virtual Agents
- ...

General Information

- Keywords: Emotional, Multimodal, Acted, Dyadic
- Language: English
- 10 Actors: 5 male and 5 female
- Emotion Elicitation Techniques: Improvisations and Scripts

Available Modalities

- Motion Capture Face Information
- Speech
- Videos
- Head Movement and Head Angle Information
- Dialog Transcriptions
- Word level, Syllable level and Phoneme level alignment

Annotations

Detailed Description

- C. Busso, M. Bulut, C. Lee, A. Kazemzadeh, E. Mower, S. Kim, J. Chang, S. Lee, and S. Narayanan, "IEMOCAP: Interactive emotional dyadic motion capture database," Journal of Language Resources and Evaluation, vol. 42, no. 4, pp. 335-359, December 2008.


(c) 2004 Speech Analysis & Interpretation Laboratory

3710 S. McClintock Ave, RTH 320

Los Angeles, CA 90089, U.S.A
